Publications and Patents

CheckNet: Secure Inference on Untrusted Devices

Comiter MZ, Teerapittayanon S, Kung HT

International Conference on Machine Learning and Applications (ICMLA) 2019, December 2019

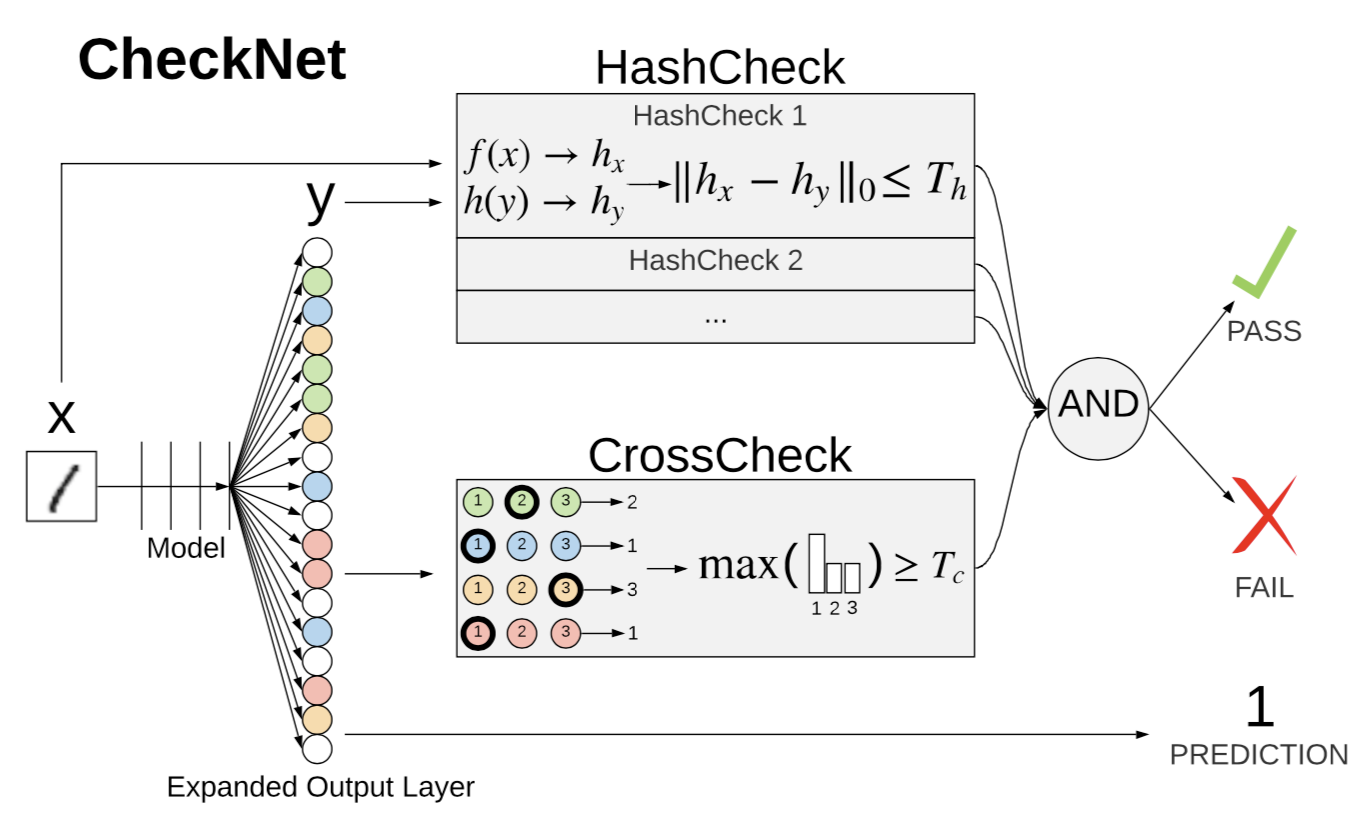

We introduce CheckNet, a method for secure inference with deep neural networks on untrusted devices. CheckNet is like a checksum for neural network inference: it verifies the integrity of the inference computation performed by untrusted devices to 1) ensure the inference has actually been performed, and 2) ensure the inference has not been manipulated by an attacker.

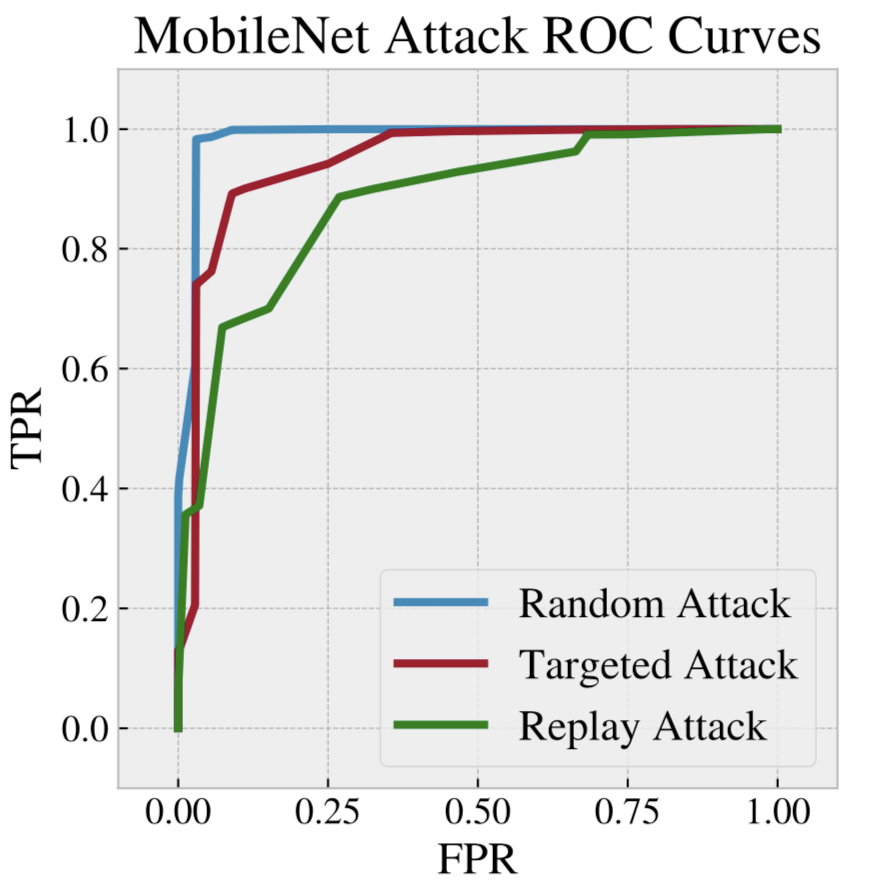

CheckNet is a general method for securing neural network inference computation: it is completely transparent to the third party running the computation, applicable to all types of neural networks, does not require specialized hardware, adds little overhead, and has negligible impact on model performance. CheckNet can be configured to provide different levels of security depending on application needs and compute/communication budgets. We present both empirical and theoretical validation of CheckNet on multiple popular deep neural network models, showing excellent attack detection (0.88-0.99 AUC) and attack success bounds.

Attacking Artificial Intelligence: AI’s Security Vulnerability and What Policymakers Can Do About It

Featured in the Washington Post, September 2019

Marcus Comiter

Belfer Center for Science and International Affairs, August 2019

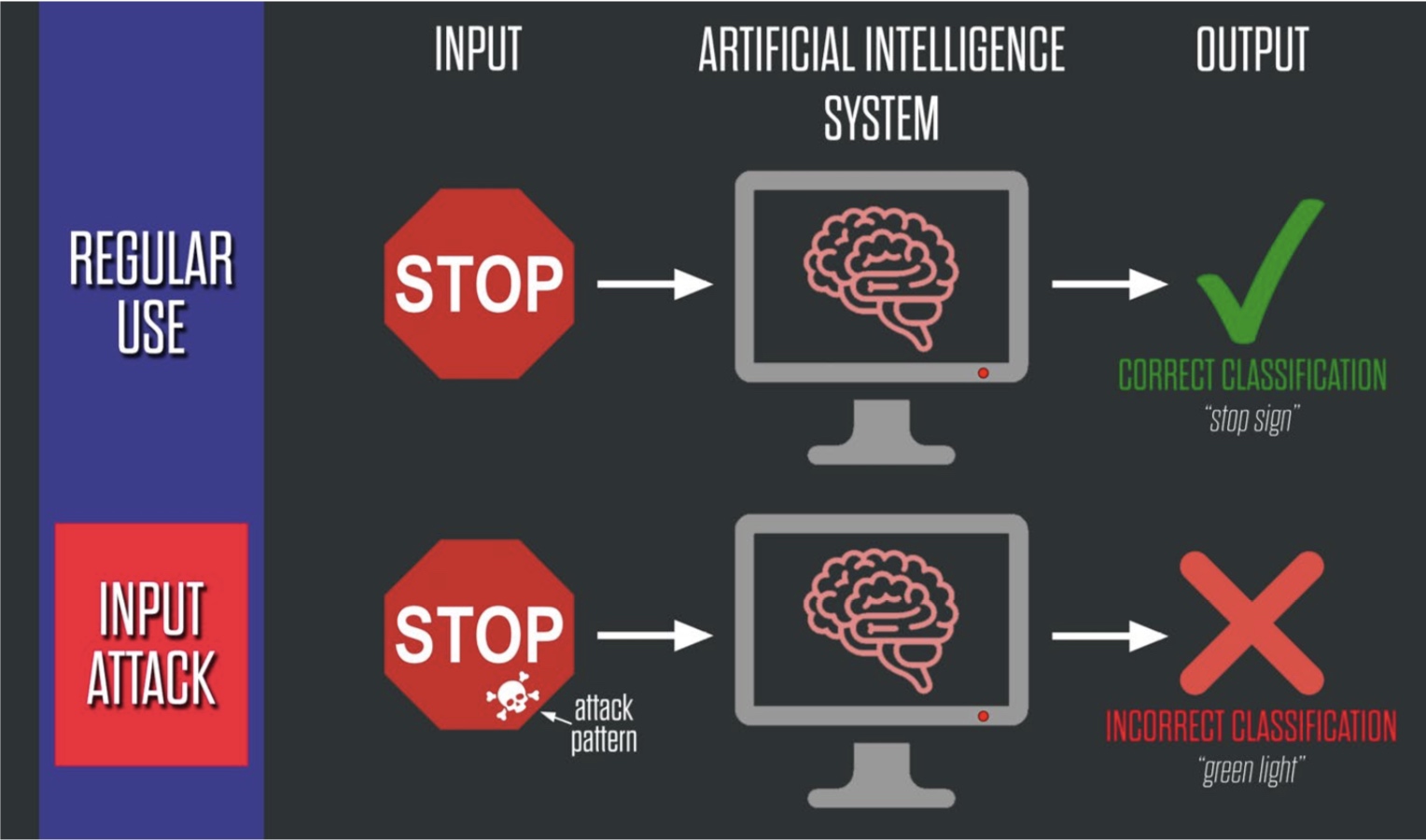

Building artificial intelligence (AI) into critical aspects of society is creating systemic vulnerabilities that can be exploited by adversaries to potentially devastating effect. This report helps to close the gap between black box Silicon Valley AI magic and the careful thinking that needs to get done by policymakers to address this emerging threat. It explains the underlying vulnerabilities that can be exploited, and explains how, unlike traditional cyberattacks that are caused by “bugs” or human mistakes in code, “AI attacks” are enabled by inherent limitations in the underlying AI algorithms. The report then analyzes which critical parts of society are vulnerable to these new types of attacks.

This report proposes “AI Security Compliance” programs to protect against AI attacks. Public policy creating “AI Security Compliance” programs will reduce the risk of attacks on AI systems and lower the impact of successful attacks. Compliance programs would accomplish this by encouraging stakeholders to adopt a set of best practices in securing systems against AI attacks, including considering attack risks and surfaces when deploying AI systems, adopting IT-reforms to make attacks difficult to execute, and creating attack response plans. This program is modeled on existing compliance programs in other industries, such as PCI compliance for securing payment transactions, and would be implemented by appropriate regulatory bodies for their relevant constituents.

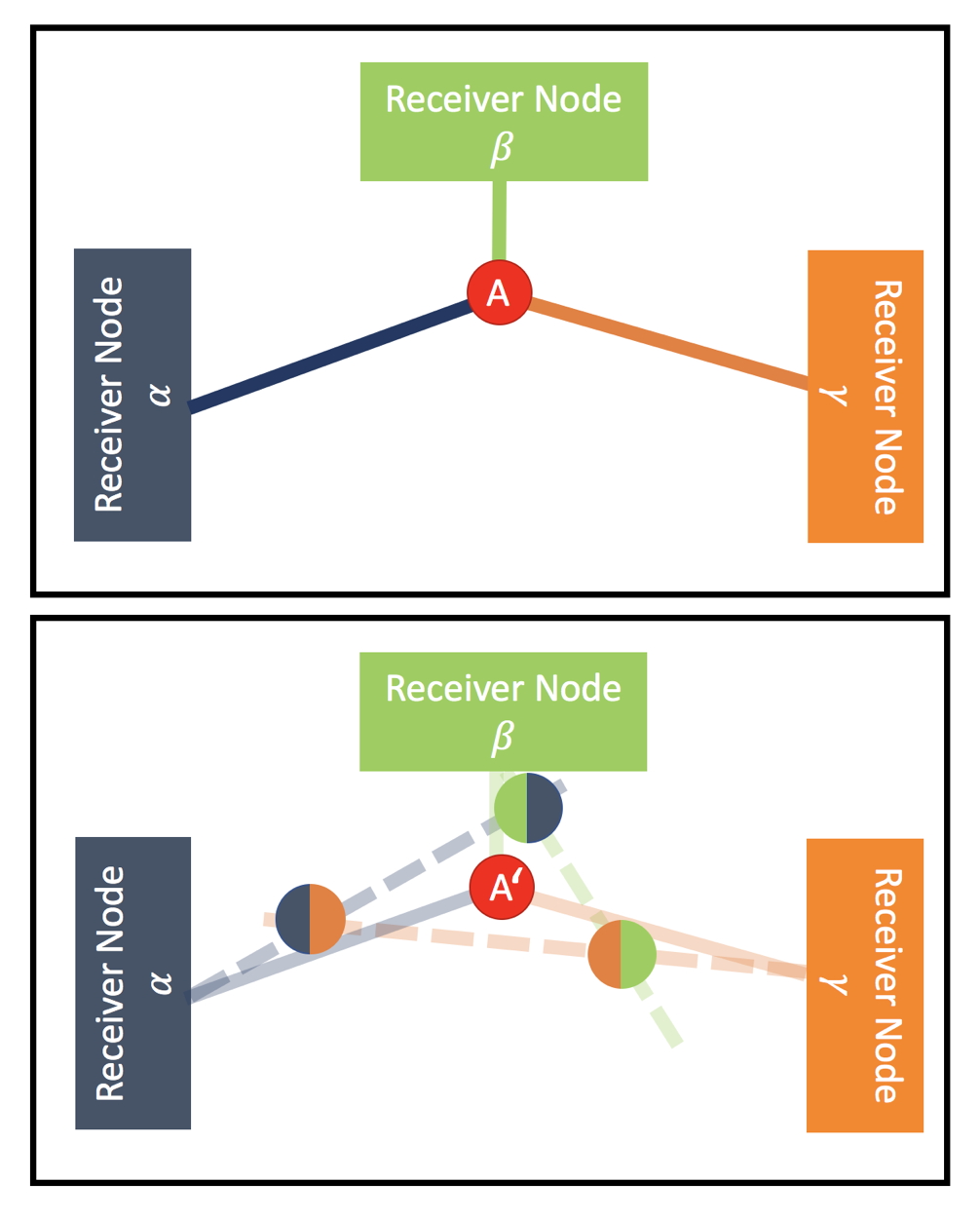

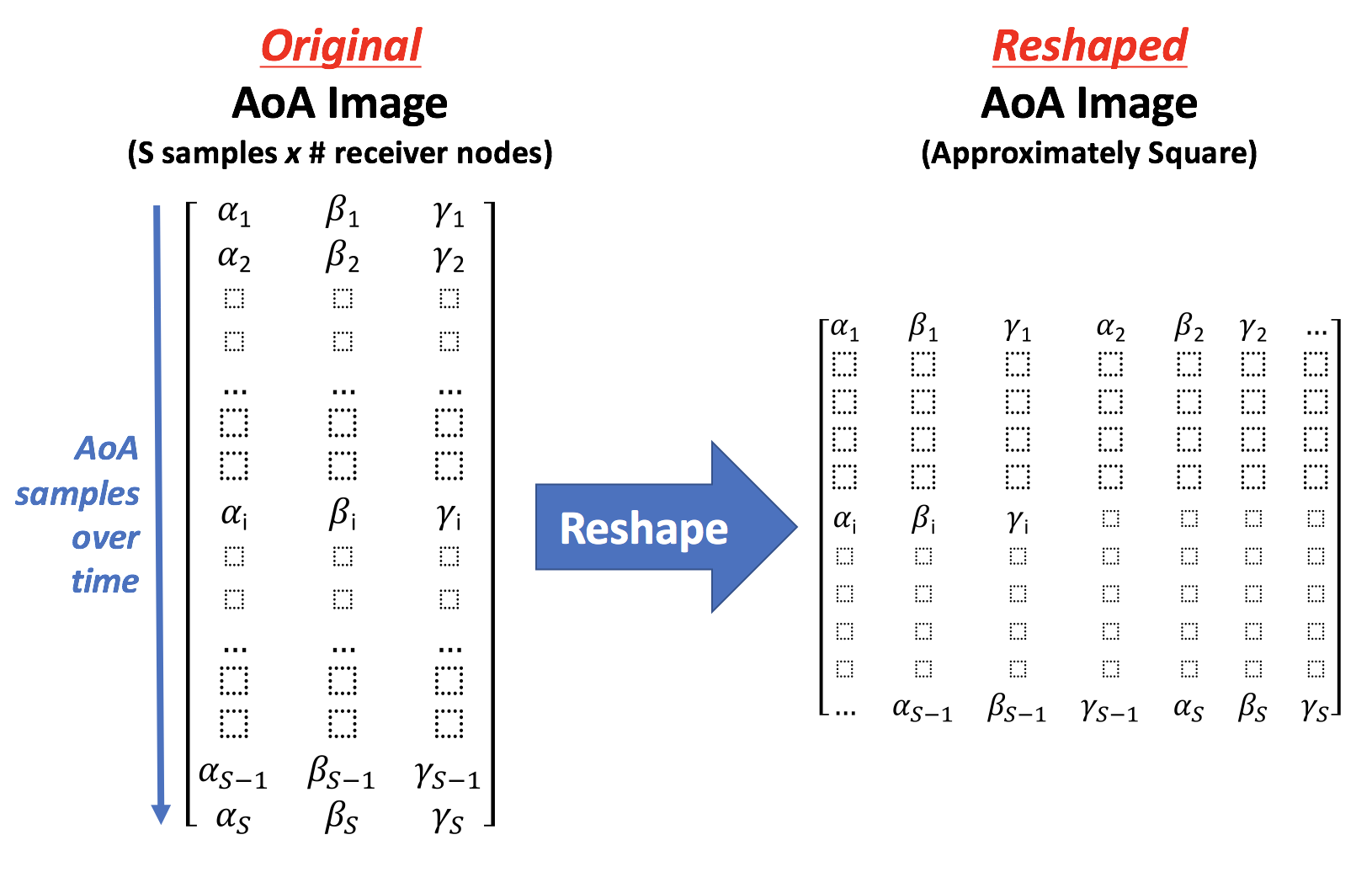

Localization Convolutional Neural Networks Using Angle of Arrival Images

Comiter MZ, Kung HT

IEEE Global Communications Conference (GLOBECOM) 2018, December 2018

We introduce localization convolutional neural networks (CNNs), a data-driven time series-based angle of arrival (AOA) localization scheme capable of coping with noise and errors in AOA estimates measured at receiver nodes. Our localization CNNs enhance their robustness by using a time series of AOA measurements rather than a single-time instance measurement to localize mobile nodes. We analyze real-world noise models, and use them to generate synthetic training data that increase the CNN’s tolerance to noise. This synthetic data generation method replaces the need for expensive data collection campaigns to capture noise conditions in the field. The proposed scheme is both simple to use and also lightweight, as the mobile node to be localized solely transmits a beacon signal and requires no further processing capabilities.

Our scheme is novel in its use of: (1) CNNs operating on space-time AOA images composed of AOA data from multiple receiver nodes over time, and (2) synthetically-generated perturbed training examples obtained via modeling triangulation patterns from noisy AOA measurements. We demonstrate that a relatively small CNN can achieve state-of-the-art localization accuracy that meets the 5G standard requirements even under high degrees of AOA noise. We motivate the use of our proposed localization CNNs with a tracking application for mobile nodes, and argue that our solution is advantageous due to its high localization accuracy and computational efficiency.
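The space-time AOA images and perturbed training examples described above can be pictured with a small sketch. It is not the authors' implementation: the Gaussian angle noise, the receiver layout, and the function names `triangulate` and `noisy_aoa_series` are assumptions made only for illustration, and the abstract does not specify the noise models actually used.

```python
import math
import random

def triangulate(rx_a, theta_a, rx_b, theta_b):
    """Intersect two bearing rays; angles in radians, measured from the +x axis."""
    ax, ay = rx_a
    bx, by = rx_b
    dax, day = math.cos(theta_a), math.sin(theta_a)
    dbx, dby = math.cos(theta_b), math.sin(theta_b)
    # Solve rx_a + t * d_a = rx_b + s * d_b for t with Cramer's rule.
    denom = dax * dby - day * dbx
    if abs(denom) < 1e-12:
        raise ValueError("bearings are parallel; no unique intersection")
    t = ((bx - ax) * dby - (by - ay) * dbx) / denom
    return ax + t * dax, ay + t * day

def noisy_aoa_series(rx_positions, path, sigma_rad, rng):
    """Return a time-by-receiver grid of AOA values with additive noise.

    Each row is one time step and each column is one receiver, so the grid
    can be treated as a small single-channel image.
    Gaussian noise is an assumption of this sketch.
    """
    image = []
    for (x, y) in path:
        row = []
        for (rx, ry) in rx_positions:
            true_angle = math.atan2(y - ry, x - rx)
            row.append(true_angle + rng.gauss(0.0, sigma_rad))
        image.append(row)
    return image

# Hypothetical layout: two receivers and a node moving along a short path.
receivers = [(0.0, 0.0), (10.0, 0.0)]
path = [(5.0, 5.0), (5.5, 5.0), (6.0, 5.0)]
rng = random.Random(0)
aoa_image = noisy_aoa_series(receivers, path, math.radians(2.0), rng)

# Single-snapshot triangulation from the first (noisy) row, for comparison
# with what a model could learn from the whole time series.
snapshot_fix = triangulate(receivers[0], aoa_image[0][0],
                           receivers[1], aoa_image[0][1])
```

Generating many such grids with different noise draws yields labeled training pairs (grid, true path position) without a field measurement campaign, which is the role synthetic data plays in the abstract.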

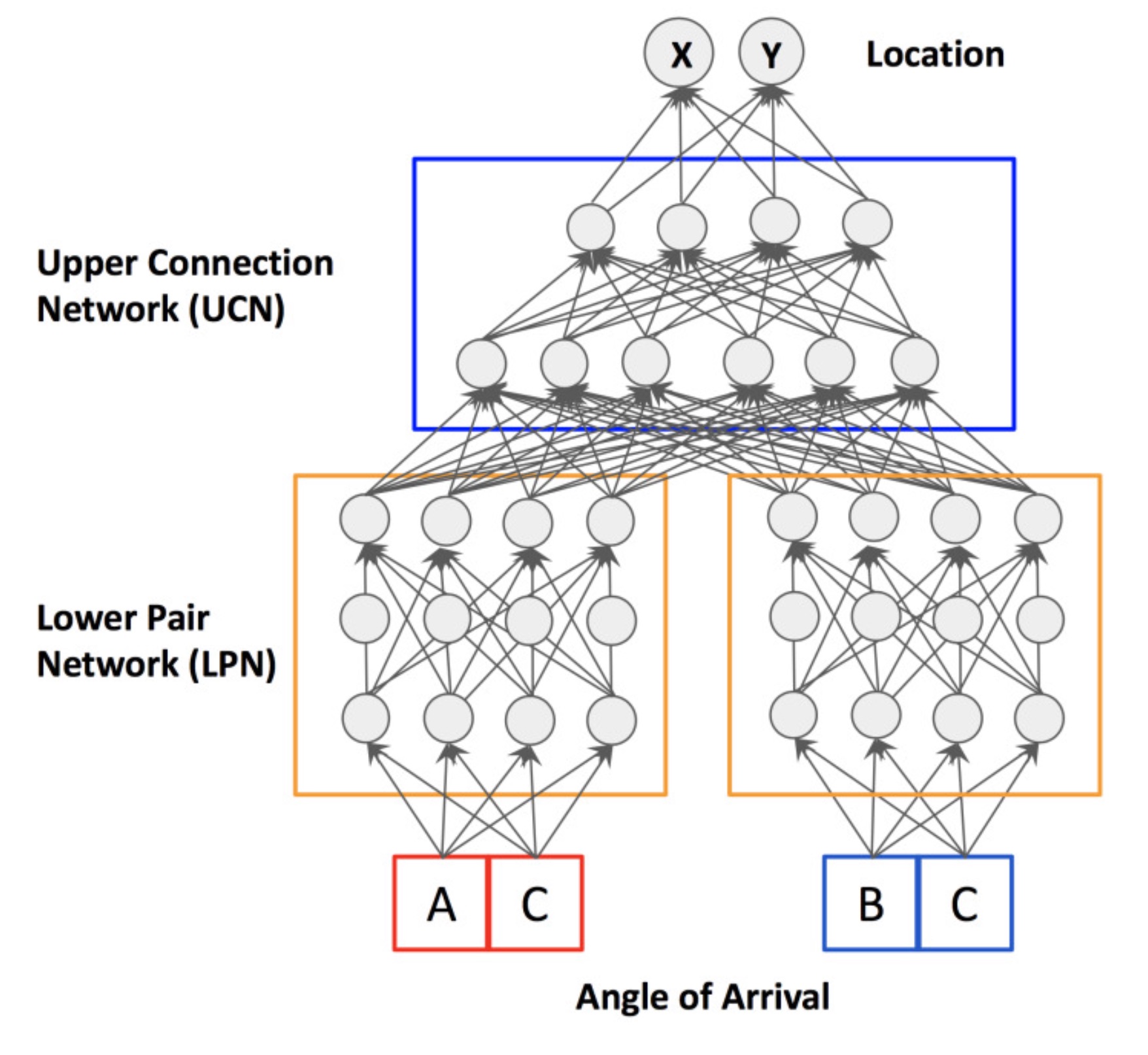

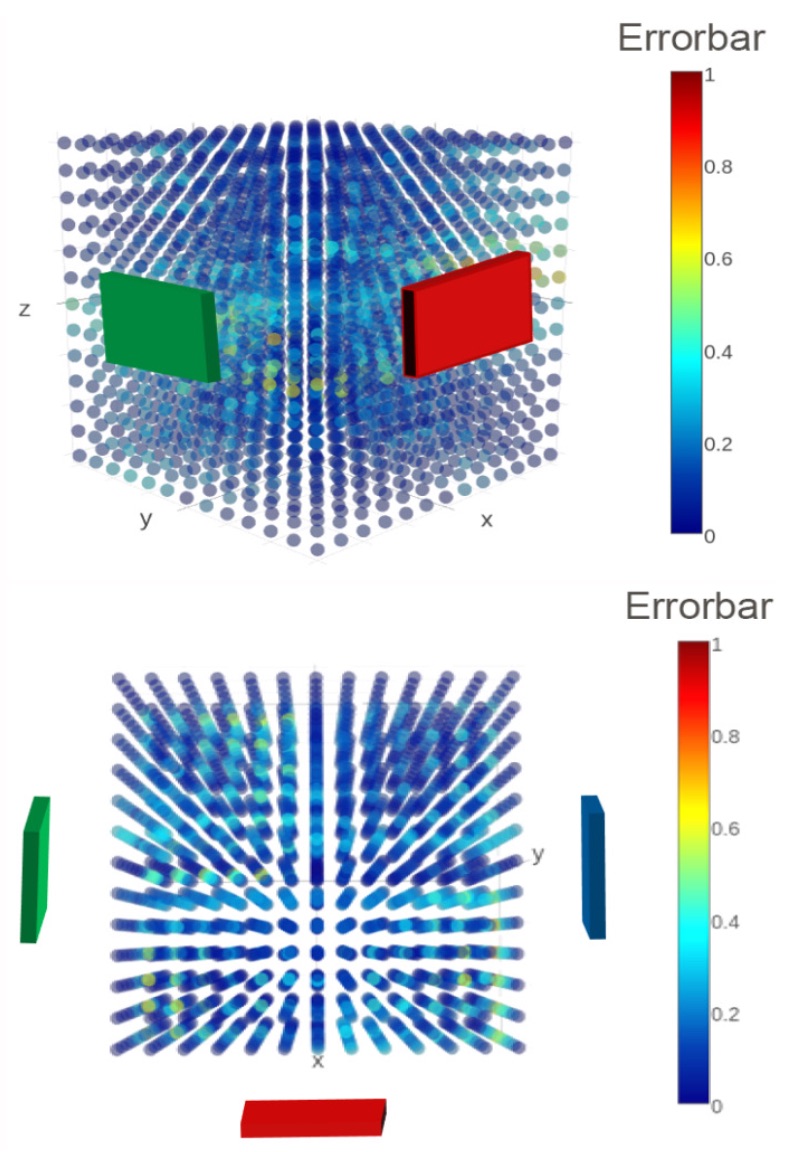

A Structured Deep Neural Network for Data Driven Localization in High Frequency Wireless Networks

Comiter MZ, Crouse MB, Kung HT

International Journal of Computer Networks and Communications (IJCNC), May 2017

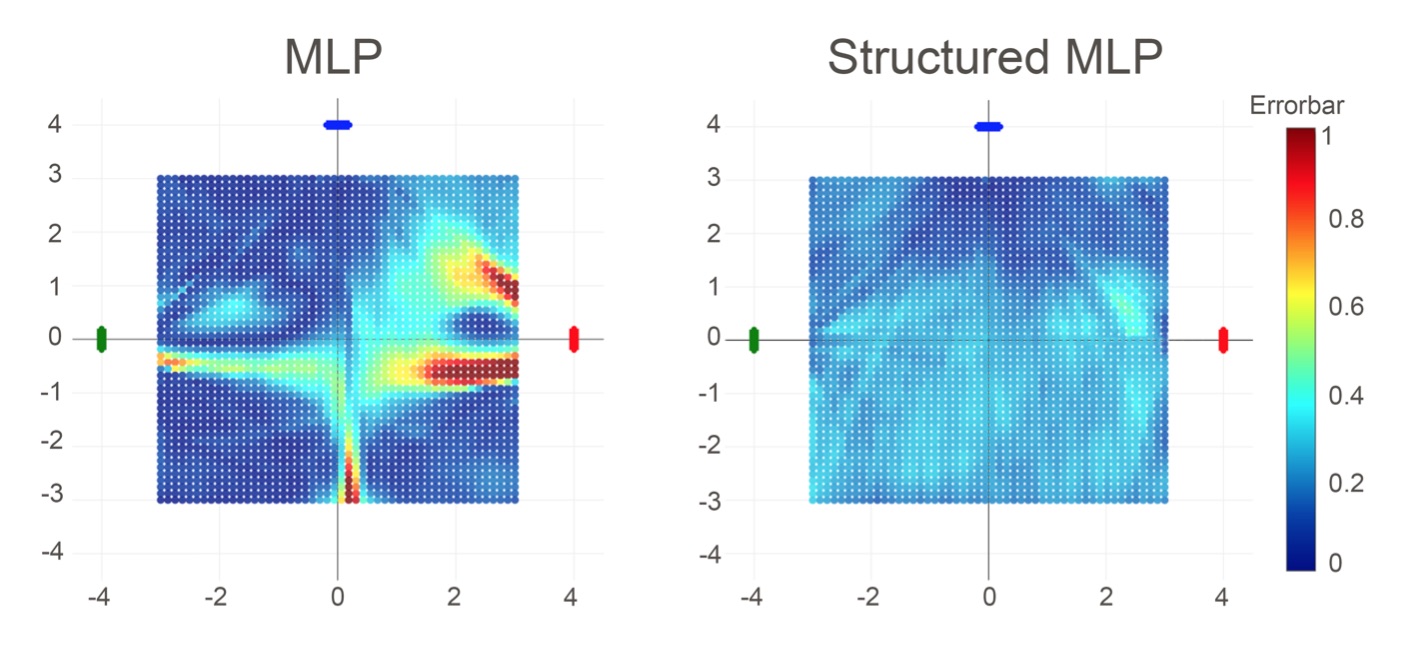

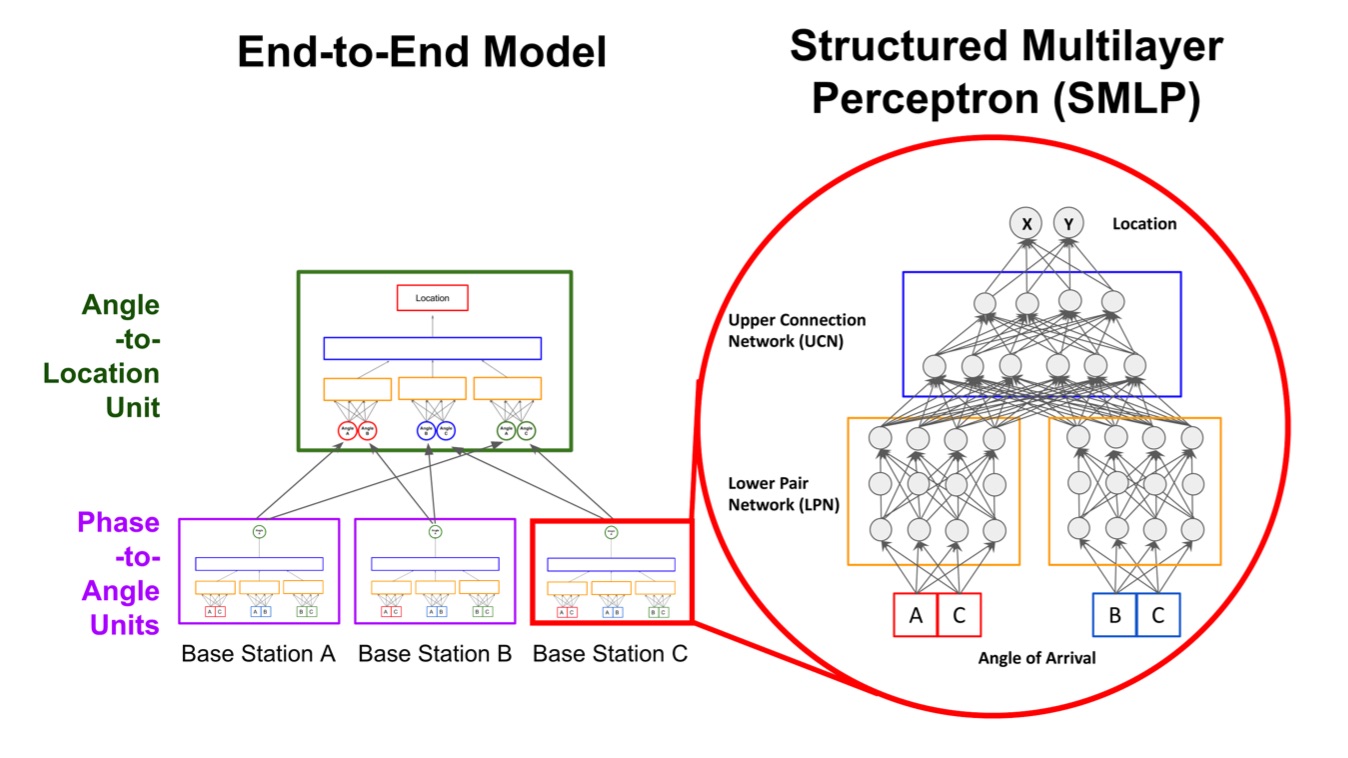

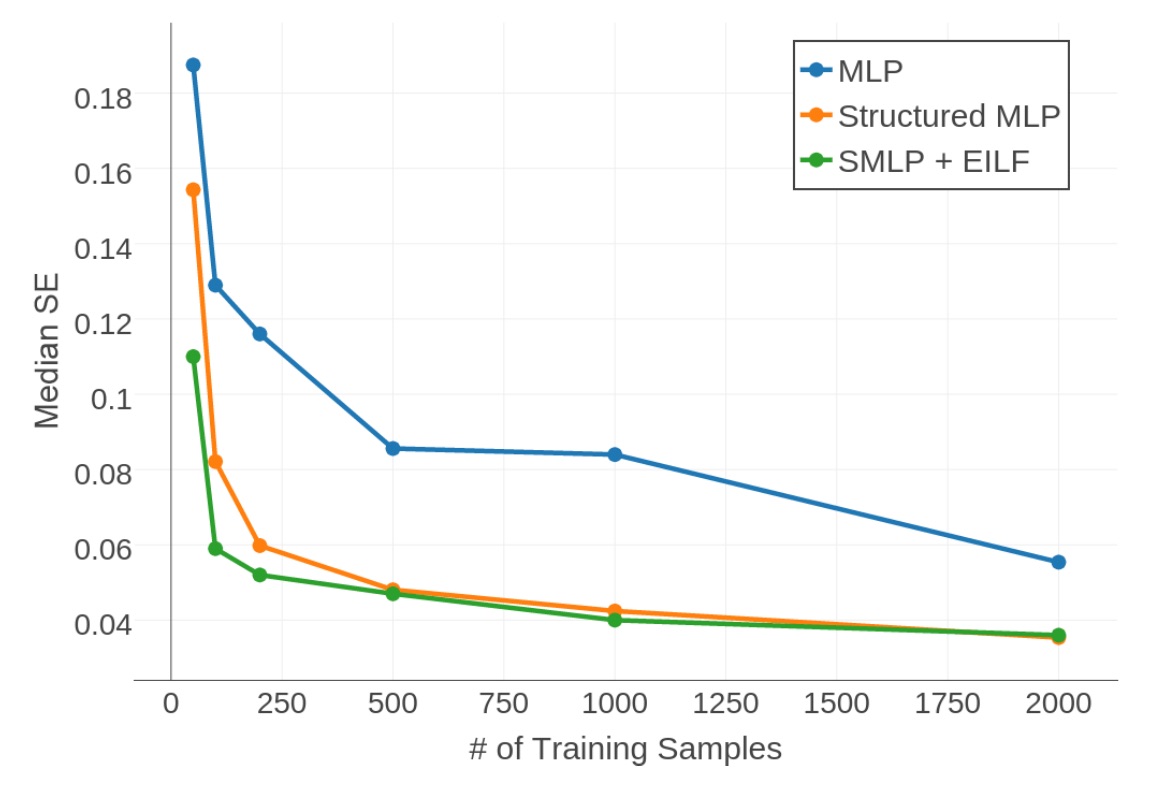

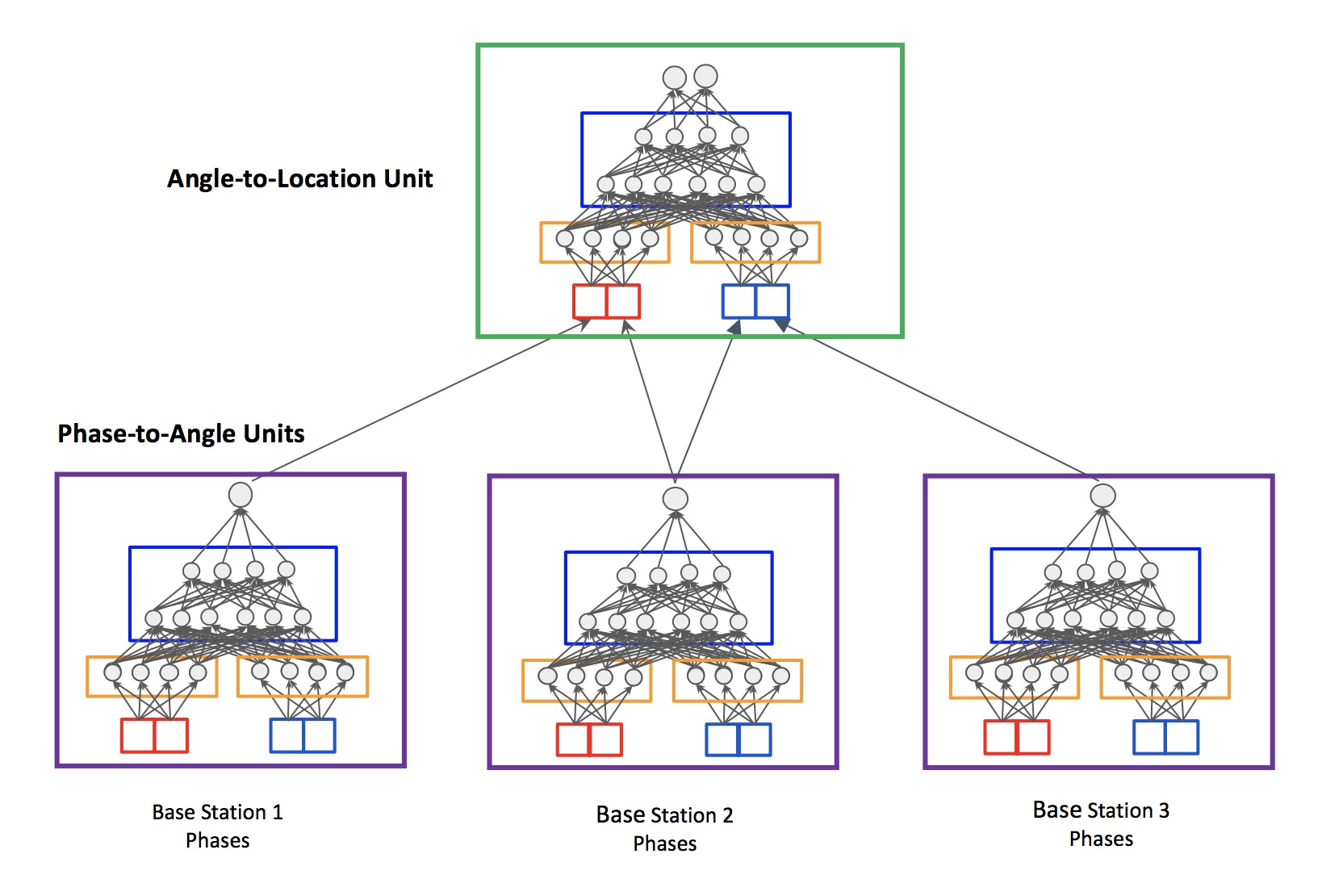

Next-generation wireless networks such as 5G and 802.11ad networks will use millimeter waves operating at 28 GHz, 38 GHz, or higher frequencies to deliver unprecedentedly high data rates, e.g., 10 gigabits per second. However, millimeter waves must be used directionally with narrow beams in order to overcome the large attenuation due to their higher frequency. To achieve high data rates in a mobile setting, communicating nodes need to align their beams dynamically, quickly, and in high resolution. We propose a data-driven, deep neural network (DNN) approach to provide robust localization for beam alignment, using a lower frequency spectrum (e.g., 2.4 GHz). The proposed DNN-based localization methods use the angle of arrival derived from phase differences in the signal received at multiple antenna arrays to infer the location of a mobile node.

Our methods differ from others that use DNNs as a black box in that the structure of our neural network model is tailored to address difficulties associated with the domain, such as collinearity of the mobile node with antenna arrays, fading and multipath. We show that training our models requires a small number of sample locations, such as 30 or fewer, making the proposed methods practical. Our specific contributions are: (1) a structured DNN approach where the neural network topology reflects the placement of antenna arrays, (2) a simulation platform for generating training and evaluation data sets under multiple noise models, and (3) demonstration that our structured DNN approach improves localization under noise by up to 25% over traditional off-the-shelf DNNs, and can achieve sub-meter accuracy in a real-world experiment.
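As a rough illustration of how an angle of arrival can be derived from a phase difference, the sketch below uses the textbook far-field, narrowband relation for two antenna elements, Δφ = 2π·d·sin(θ)/λ. This is an assumption for illustration only; the abstract does not state which estimator the paper uses, and the function name `aoa_from_phase` is hypothetical.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # metres per second

def aoa_from_phase(delta_phi, spacing_m, freq_hz):
    """Angle of arrival in radians from broadside, given the phase difference
    (radians) between two elements separated by spacing_m."""
    wavelength = SPEED_OF_LIGHT / freq_hz
    ratio = delta_phi * wavelength / (2.0 * math.pi * spacing_m)
    # Measurement noise can push the ratio slightly outside [-1, 1].
    ratio = max(-1.0, min(1.0, ratio))
    return math.asin(ratio)

# Example at 2.4 GHz with half-wavelength spacing, which keeps the
# phase-to-angle mapping unambiguous over (-90, 90) degrees.
freq = 2.4e9
wavelength = SPEED_OF_LIGHT / freq
spacing = wavelength / 2.0
true_angle = math.radians(30.0)
delta_phi = 2.0 * math.pi * spacing * math.sin(true_angle) / wavelength
estimate = aoa_from_phase(delta_phi, spacing, freq)
```

Angles estimated this way at several arrays are the kind of input a localization network would consume; multipath and fading distort the measured phase, which is one reason a learned model can help.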

A Data-Driven Approach to Localization for High Frequency Wireless Mobile Networks

Comiter MZ, Crouse MB, Kung HT

IEEE Global Communications Conference (GLOBECOM) 2017, December 2017

To achieve high data rates in a mobile setting, next-generation wireless networks such as 5G networks will use high frequency millimeter-wave (mmWave) bands at 28 GHz or higher. Communicating in high frequencies requires directional antennas on base stations to align their beams dynamically, quickly, and in high resolution with mobile nodes. To this end, we propose a data-driven deep neural network (DNN) localization approach using lower frequency spectrum. Our methods require fewer than 30 real-world sample locations to learn a model that, using signals received at multiple antenna arrays from a mobile node, can localize a mobile node to the required 5G indoor sub-meter accuracy.

We demonstrate with real-world data in indoor and outdoor experiments that this performance is achievable in multipath-rich environments. We show via simulation that the proposed DNN approach is robust against noise and collinearity between antenna arrays. A key feature of our approach is that, unlike other methods that use DNNs as a black box, we tailor the structure (topology) of the DNNs to the underlying localization task.

Our primary contributions are: (1) a novel structure for a deep neural network that reflects base station locations, (2) a quantized loss function for neural network training that improves accuracy and reduces the amount of training data needed, and (3) a procedure for generating synthetic data to reduce the required number of real-world measurements needed for training an accurate data-driven localization model. Our real-world experiments show that the use of synthetic data can improve localization accuracy by over 3x.

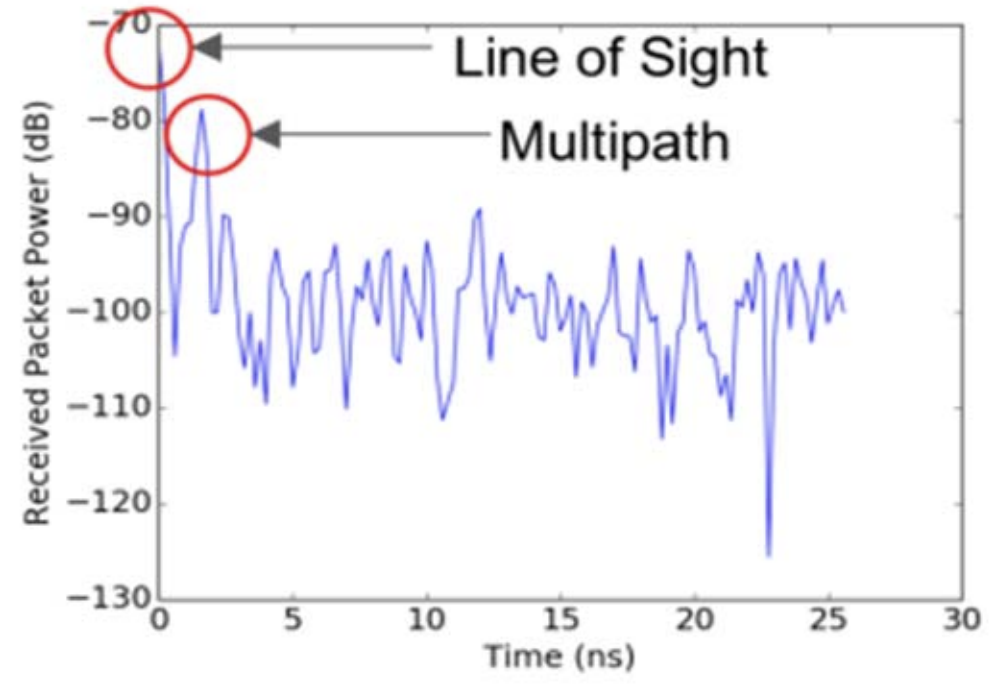

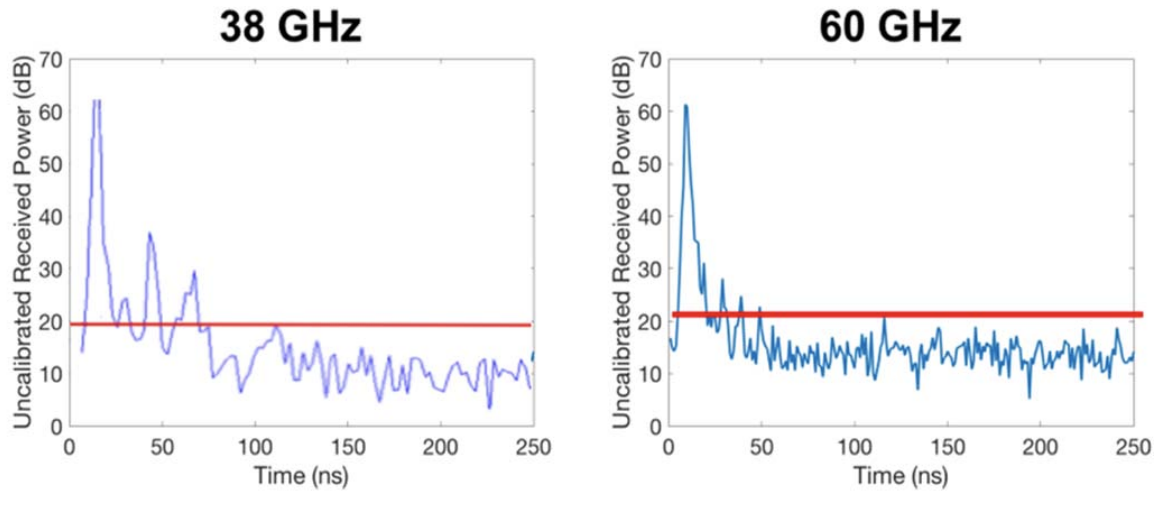

Millimeter-wave Field Experiments with Many Antenna Configurations for Indoor Multipath Environments

Comiter M, Crouse M, Kung HT, Tarng JH, Tsai ZM, Wu WT, Lee TS, Chang MC, Kuan YC

4th International Workshop on 5G/5G+ Communications in Higher Frequency Bands (5GCHFB) in conjunction with IEEE Globecom 2017, December 2017

Next-generation wireless networks, such as 5G networks, will use millimeter waves (mmWaves) to deliver extremely high data rates. Due to high attenuation at this higher frequency, use of directional antennas is commonly suggested for mmWave communication. It is therefore important to study how different antenna configurations at the transmitter and receiver affect received power and data throughput. In this paper, we describe field experiments with mmWave antennas for indoor multipath environments and report measurement results on a multitude of antenna configurations. Specifically, we examine four different mmWave systems, operating at two different frequencies (38 and 60 GHz), using a number of different antennas (horn antennas, omnidirectional antennas, and phased arrays).

For each system, we systematically collect performance measurements (e.g., received power), and use these to examine the effects of beam misalignment on signal quality, the presence of multipath effects, and susceptibility to blockage. We capture interesting phenomena, including a multipath scenario in which a single receiver antenna can receive two copies of signals transmitted from the same transmitter antenna over multiple paths. From these field experiments, we discuss lessons learned, draw several conclusions, and consider their applicability to the design of future mmWave networks.

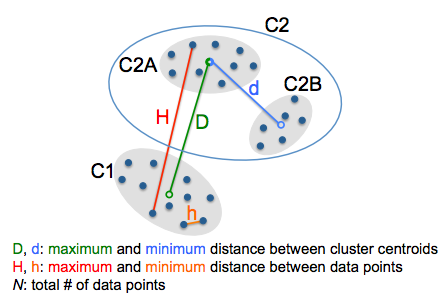

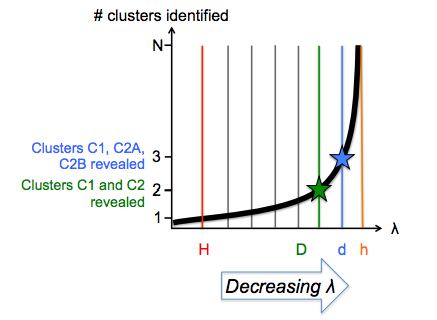

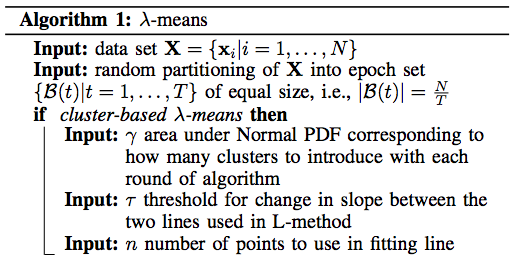

Lambda Means Clustering: Automatic Parameter Search and Distributed Computing Implementation

Comiter M, Cha M, Kung HT, Teerapittayanon S

23rd International Conference on Pattern Recognition (ICPR 2016), December 2016

Recent advances in clustering have shown that ensuring a minimum separation between cluster centroids leads to higher quality clusters compared to those found by methods that explicitly set the number of clusters to be found, such as k-means. One such algorithm is DP-means, which sets a distance parameter λ for the minimum separation. However, without knowing either the true number of clusters or the underlying true distribution, setting λ itself can be difficult, and poor choices in setting λ will negatively impact cluster quality.

As a general solution for finding λ, in this paper we present λ-means, a clustering algorithm capable of deriving an optimal value for λ automatically. We contribute both a theoretically motivated cluster-based version of λ-means, as well as a faster conflict-based version of λ-means. We demonstrate that λ-means discovers the true underlying value of λ asymptotically when run on datasets generated by a Dirichlet Process, and achieves competitive performance on real-world test datasets. Further, we demonstrate that when run on both parallel multicore computers and distributed cluster computers in the cloud, cluster-based λ-means achieves near perfect speedup, and while being a more efficient algorithm, conflict-based λ-means achieves speedups only a factor of two away from the maximum possible.
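To make the role of λ concrete, here is a minimal sketch of plain DP-means, the baseline the abstract refers to, not the λ-means search itself. It follows the usual DP-means formulation, in which a point opens a new cluster when its squared Euclidean distance to every existing centroid exceeds λ. The function name `dp_means` and the toy data are assumptions for illustration.

```python
def dp_means(points, lam, max_iter=100):
    """Minimal DP-means sketch: returns (centroids, labels)."""
    def sqdist(p, q):
        return sum((a - b) ** 2 for a, b in zip(p, q))

    dim = len(points[0])
    # Start with a single cluster located at the global mean.
    centroids = [tuple(sum(p[d] for p in points) / len(points) for d in range(dim))]
    labels = [0] * len(points)

    for _ in range(max_iter):
        changed = False
        # Assignment step: nearest centroid, or a new cluster if too far.
        for i, p in enumerate(points):
            dists = [sqdist(p, c) for c in centroids]
            k = min(range(len(centroids)), key=dists.__getitem__)
            if dists[k] > lam:
                centroids.append(tuple(p))
                k = len(centroids) - 1
            if labels[i] != k:
                labels[i] = k
                changed = True

        # Update step: recompute means and drop clusters left empty.
        members = {}
        for i, k in enumerate(labels):
            members.setdefault(k, []).append(points[i])
        kept = sorted(members)
        remap = {old: new for new, old in enumerate(kept)}
        centroids = [
            tuple(sum(p[d] for p in members[old]) / len(members[old]) for d in range(dim))
            for old in kept
        ]
        labels = [remap[k] for k in labels]

        if not changed:
            break
    return centroids, labels

# Toy data: two well-separated groups of three points each.
data = [(0.0, 0.0), (0.0, 1.0), (1.0, 0.0),
        (10.0, 10.0), (10.0, 11.0), (11.0, 10.0)]
tight_centroids, tight_labels = dp_means(data, lam=9.0)
loose_centroids, loose_labels = dp_means(data, lam=1000.0)
```

On this toy data the smaller λ separates the two groups while the larger λ merges everything into one cluster, which is the sensitivity that motivates choosing λ automatically.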

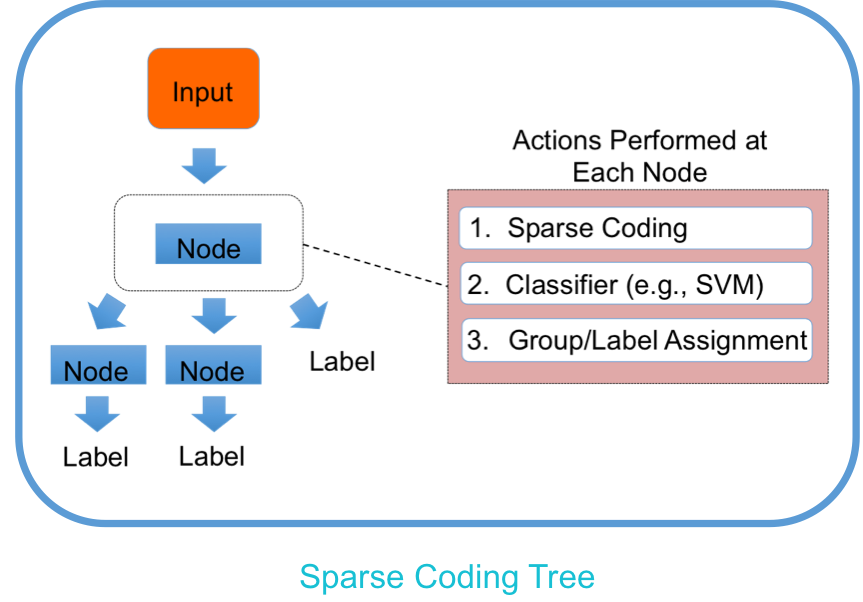

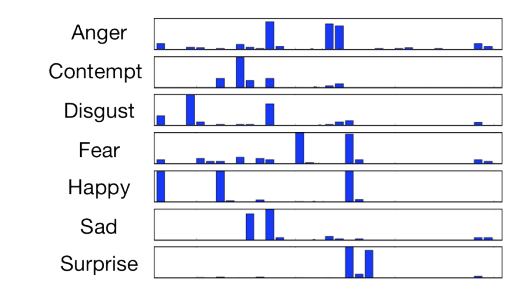

Sparse Coding Trees with Application to Emotion Classification

Best Paper Award, CVPR-AMFG 2015

Chen H-C, Comiter M, Kung HT, McDanel B

IEEE Workshop on Analysis and Modeling of Faces and Gestures (CVPR-AMFG 2015), June 2015

We present Sparse Coding trees (SC-trees), a sparse coding-based framework for resolving misclassifications arising when multiple classes map to a common set of features. SC-trees are novel supervised classification trees that use node-specific dictionaries and classifiers to direct input based on classification results in the feature space at each node. We have applied SC-trees to emotion classification of facial expressions. This paper uses this application to illustrate concepts of SC-trees and how they can achieve high performance in classification tasks. When used in conjunction with a nonnegativity constraint on the sparse codes and a method to exploit facial symmetry, SC-trees achieve results comparable with or exceeding the state-of-the-art classification performance on a number of realistic and standard datasets.

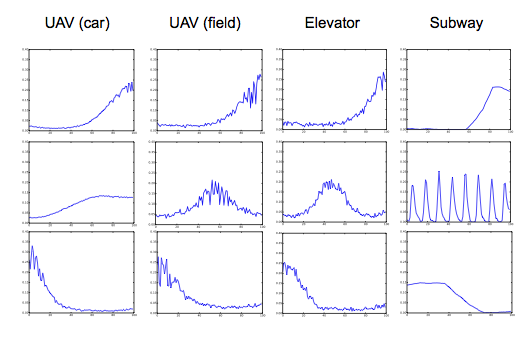

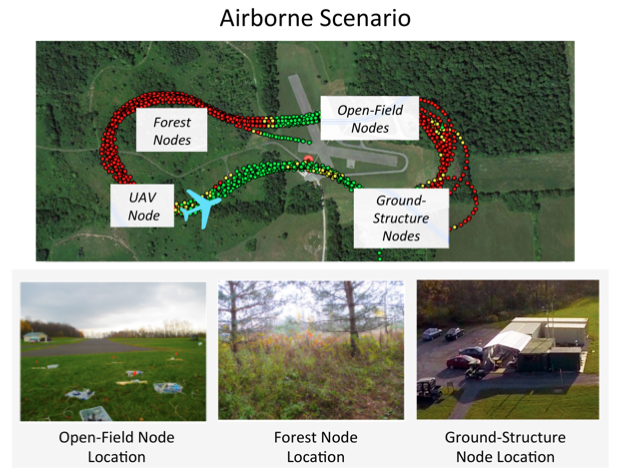

Taming Wireless Fluctuations by Predictive Queuing Using a Sparse-Coding Link-State Model

Tarsa SJ, Comiter M, Crouse M, McDanel B, Kung HT

ACM MobiHoc 2015, June 2015

We introduce State-Informed Link-Layer Queuing (SILQ), a system that models, predicts, and avoids packet delivery failures caused by temporary wireless outages in everyday scenarios. By stabilizing connections in adverse link conditions, SILQ boosts throughput and reduces performance variation for network applications, for example by preventing unnecessary TCP timeouts due to dead zones, elevators, and subway tunnels. SILQ makes predictions in real-time by actively probing links, matching measurements to an overcomplete dictionary of patterns learned offline, and classifying the resulting sparse feature vectors to identify those that precede outages. We use a clustering method called sparse coding to build our data-driven link model, and show that it produces more variation-tolerant predictions than traditional loss-rate, location-based, or Markov chain techniques.

We present extensive data collection and field-validation of SILQ in airborne, indoor, and urban scenarios of practical interest. We show how offline unsupervised learning discovers link-state patterns that are stable across diverse networks and signal-propagation environments. Using these canonical primitives, we train outage predictors for 802.11 (Wi-Fi) and 3G cellular networks to demonstrate TCP throughput gains of 4x with off-the-shelf mobile devices. SILQ addresses delivery failures solely at the link layer, requires no new hardware, and upholds the end-to-end design principle to enable easy integration across applications, devices, and networks.
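The step of matching measurements to an overcomplete dictionary can be sketched with matching pursuit, a standard greedy sparse-coding routine. This is a stand-in chosen for brevity: the abstract does not name the solver SILQ uses, and the toy dictionary, the signal, and the function name `matching_pursuit` are assumptions for illustration.

```python
import math

def matching_pursuit(signal, dictionary, n_nonzero):
    """Greedy sparse code of `signal` over unit-norm atoms.

    Returns (code, residual), where code[k] is the weight given to atom k.
    """
    residual = list(signal)
    code = [0.0] * len(dictionary)
    for _ in range(n_nonzero):
        # Correlate the current residual with every atom.
        scores = [sum(r * a for r, a in zip(residual, atom)) for atom in dictionary]
        best = max(range(len(dictionary)), key=lambda k: abs(scores[k]))
        if abs(scores[best]) < 1e-12:
            break  # nothing left to explain
        code[best] += scores[best]
        residual = [r - scores[best] * a for r, a in zip(residual, dictionary[best])]
    return code, residual

# Toy overcomplete dictionary: four unit-norm atoms in three dimensions.
inv_sqrt2 = 1.0 / math.sqrt(2.0)
atoms = [
    (1.0, 0.0, 0.0),
    (0.0, 1.0, 0.0),
    (0.0, 0.0, 1.0),
    (inv_sqrt2, inv_sqrt2, 0.0),
]
# A hypothetical link measurement that is exactly twice the fourth atom.
measurement = (2.0 * inv_sqrt2, 2.0 * inv_sqrt2, 0.0)
sparse_code, leftover = matching_pursuit(measurement, atoms, n_nonzero=2)
```

The resulting sparse vector, mostly zeros with a few active atoms, is the kind of feature a downstream classifier could label as preceding an outage or not.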

A Future of Abundant Sparsity: Novel Use and Analysis of Sparse Coding in Machine Learning Applications

Comiter M

Undergraduate Thesis in Computer Science and Statistics, Harvard University

I present novel applications and analysis of the use of sparse coding within the context of machine learning.

Awarded highest honors by both the Harvard Department of Computer Science and Harvard Department of Statistics.

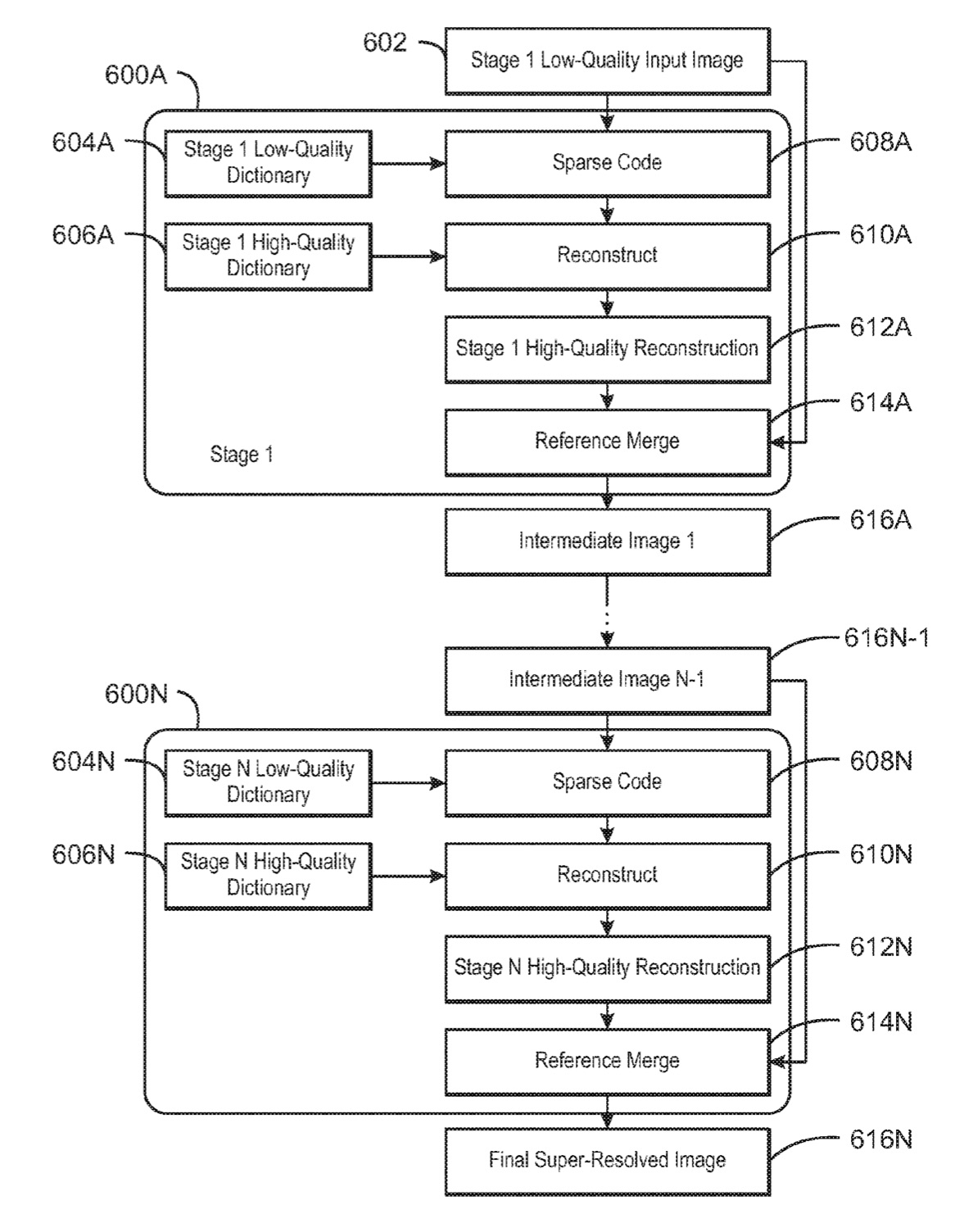

Multi-stage Image Super-resolution with Reference Merging Using Personalized Dictionaries

Lin CK, Comiter MZ, Gulati H, Chinya GN

United States Patent US 9697584 B1, Issued July 2017

An apparatus for multi-stage super-resolution is described herein. The apparatus includes a personalized dictionary, a plurality of super-resolution stages, and a reference merger. Each of the plurality of super-resolution stages corresponds to at least one personalized dictionary, and the personalized dictionary is applied to an input image that is sparse-coded to generate a reconstructed image. The reference merger is to merge the reconstructed image and the input image to generate a super-resolved image for each stage.

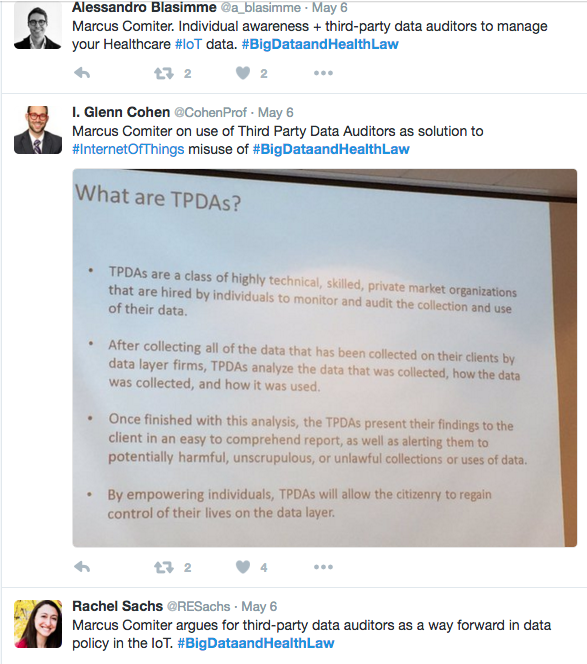

Data Policy for Internet of Things Healthcare Devices: Aligning Patient, Industry, and Privacy Goals in the Age of Big Data

Comiter M

Conference on Big Data, Health Law, and Bioethics, The Petrie-Flom Center at Harvard Law School, May 2016

Preventative healthcare aimed at reducing the incidence of chronic diseases will be a hallmark of twenty-first century healthcare systems. A key enabler of these preventative techniques will be the Internet of Things (IoT), an emerging technological paradigm connecting millions of personal healthcare devices, such as wearable heart rate monitors and activity trackers, to the Internet, allowing an unprecedented scale and granularity of biomedical data collection. In contrast to the highly specific and sensitive health data that traditionally characterizes other sources of healthcare big data, such as electronic medical records (EMRs), these IoT devices are largely consumer-facing devices whose data represent new modalities, for example the continuous monitoring of less sensitive data such as sleep duration, activity levels, diet, and heart rate.

In this paper, I argue that the data collected from IoT healthcare devices is fundamentally different in nature from traditional sources of healthcare data, such as medical records, and far more similar to data characterized by the development of the Internet. Through this lens, I assert a number of principles that data regulatory policy for the healthcare data from the IoT should enshrine. I propose the concept of “Third Party Data Auditors” (TPDAs) to embed these principles within a regulatory regime of the healthcare IoT. TPDAs are specialized, highly technical third-party actors hired by individuals to audit the use of their data by data owners such as corporations and data brokers. Through this market- and innovation-based approach, I argue that TPDAs can be an effective first step in regulating an increasingly data-driven healthcare system, and lay the groundwork for a future of responsible data use. In justifying this policy regime, I take a comparative approach to demonstrate that its efforts to align the incentives of market participants closely resemble the policy regime employed to govern consumer data on the Internet. Finally, I describe a realistic policy regime in line with existing thought that will encourage the creation of TPDAs. I argue that together, TPDAs and the associated policy regime will embed fundamental accountability and transparency measures into a data-driven healthcare system. By building in the needed accountability and transparency mechanisms from the beginning of the field using newly available technologies for tagging and tracking data, TPDAs will allow for innovation to flourish by allowing the broad use of data without the dangers of a runaway system.

Algorithmic Allegories

See New Mega-Hit Case Study on Tech Ethics for press coverage

Marcus Comiter, Ben Sobel, Jonathan Zittrain

Harvard Law School Case Study, May 2016

Algorithmic Allegories chronicles the Proceedings of the National Academy of Sciences emotion contagion study controversy and the legal and policy issues it introduced, and offers six related hypotheticals to probe the moral, legal, and technical implications of algorithms in modern society. By considering the use of algorithms in print media, charity, business, and other situations, the case study focuses on the feasibility of implementing policy in a rapidly changing, technology-powered landscape and helps readers appreciate the role of algorithms in modern society and the responsibility that accompanies their use.

Chapter 10: Data Policy for Internet of Things Healthcare Devices: Aligning Patient, Industry, and Privacy Goals in the Age of Big Data

Available for purchase on Amazon, Cambridge Press, and Barnes and Noble

Marcus Comiter

Volume Editors: I. Glenn Cohen, Holly Fernandez Lynch, Effy Vayena, Urs Gasser

Cambridge University Press, 2018

Author of Chapter 10: "Data Policy for Internet of Things Healthcare Devices: Aligning Patient, Industry, and Privacy Goals in the Age of Big Data" in the book Big Data, Health Law, and Bioethics, published by Cambridge University Press in March 2018.

The chapter proposes and explains "Third Party Data Auditors" (TPDAs) as a regulatory regime of the healthcare IoT. Published in concert with the Conference on Big Data, Health Law, and Bioethics, The Petrie-Flom Center at Harvard Law School.

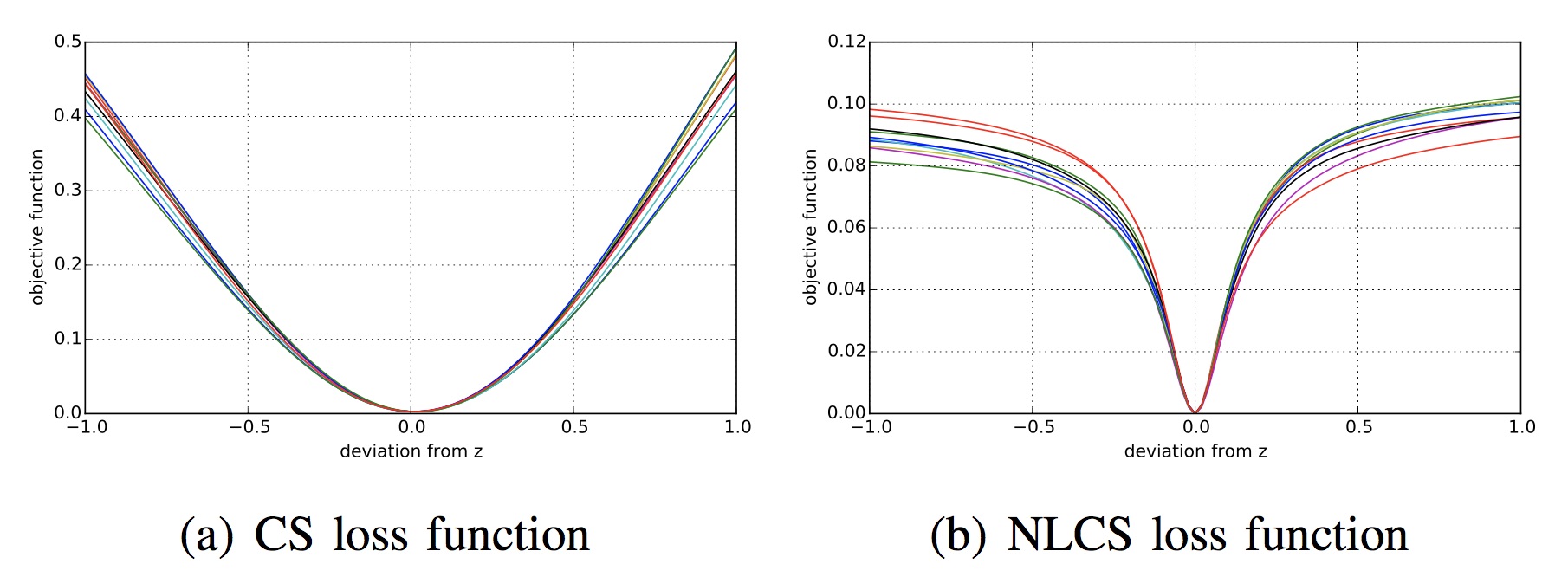

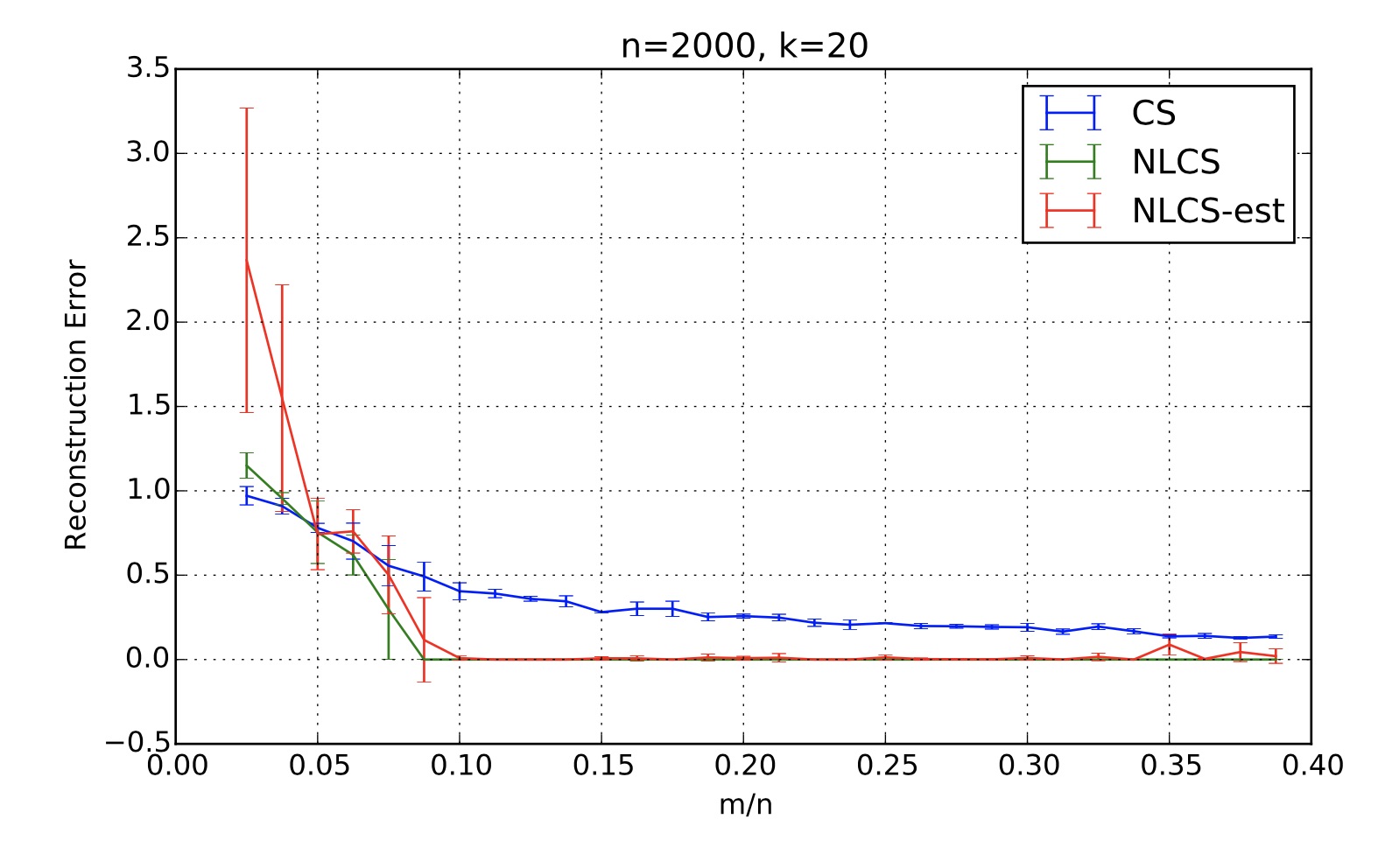

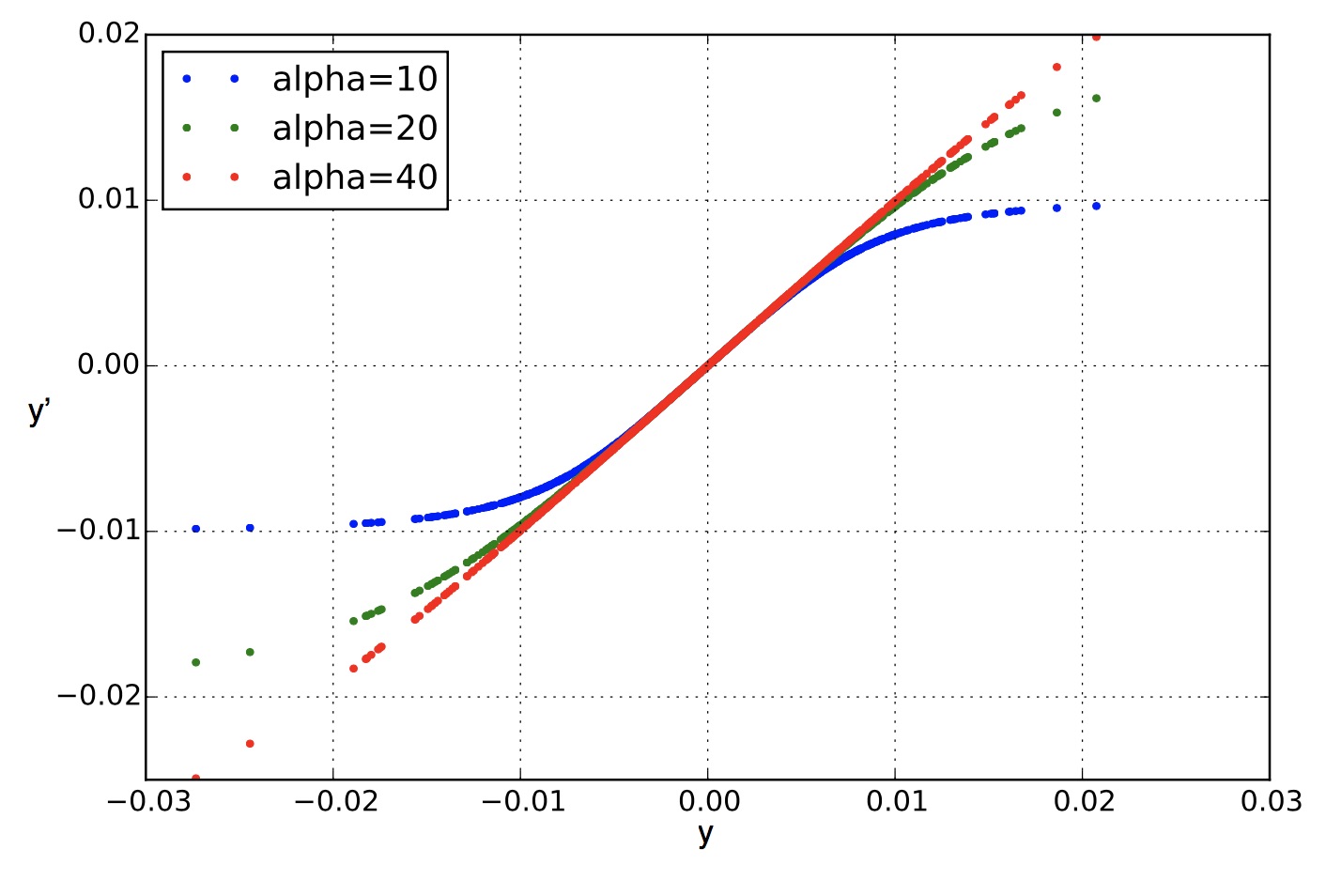

Nonlinear Compressive Sensing for Distorted Measurements and Application to Improving Efficiency of Power Amplifiers

Chen H-C, Kung HT, Comiter M

IEEE International Conference on Communications (ICC 2017), May 2017

Compressive sensing, which enables signal recovery from fewer samples than traditional sampling theory dictates, assumes that the sampling process is linear. However, this linearity assumption may not hold in the analog domain without significant trade-offs, such as power amplifiers sacrificing substantial power efficiency in exchange for producing linear outputs. Since compressive sensing is most impactful when implemented in the analog domain, it is of interest to integrate the nonlinearity in compressive measurements into the signal recovery process such that nonlinear effects can be mitigated. As such, in this paper, we describe a nonlinear compressive sensing formulation and associated signal recovery algorithms, providing both compression and improved efficiency of a power amplifier simultaneously with one procedure. We present evaluations of the proposed framework using both measurements from real power amplifiers and simulations.

New Paradigms for Distributed Computing on Secure Next Generation Networks

Comiter M, Kung HT, Teerapittayanon S

National Science Foundation (NSF) Applications and Services in the Year 2021 Workshop, January 2016

Recent advances in high-speed, low-latency networks and new computer networking paradigms such as SDN and fog computing will enable opportunities for new developments in distributed computing, the Internet of Things, and computing paradigms to support a new generation of applications. In this whitepaper, we outline a number of challenges and opportunities enabled by these developments, including Optimized Compute via Network Programmability, a new paradigm we term Progressive Computing via Multi-scale Virtual Networks, and New Paradigms for Security and Privacy via Virtualization.

Introductory R

www.introductoryR.com

This is a brief introduction to R and important topics in programming to help readers get accustomed to programming and statistical paradigms and terminology. The chapters are purposely brief so that readers can quickly learn these basic topics and then comprehend and better leverage other R manuals and resources. Released free online at www.introductoryR.com under a public license.
